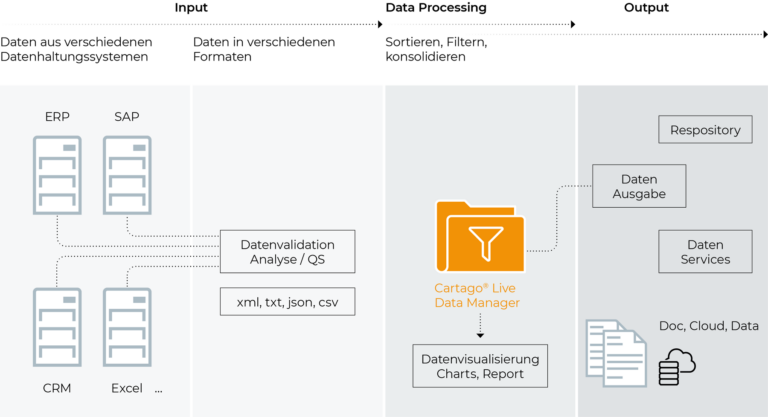

Data inputs, processes and outputs

Automated and standardised data management

Data collection (data warehouse)

Data collection is the process of connecting the organisation’s existing systems to the Cartago solution. The Cartago®Live Data Manager can integrate data from a wide variety of sources in many different ways. Depending on the requirements, data can be transmitted directly by the existing system (push) or requested at a specific time (pull). If necessary, data can also be reloaded during data processing. Cartago offers preconfigured data collection interfaces for a variety of CRM, ERP and other sector-specific systems, including those used in insurance and personnel management. After minor project-specific adjustments, these interfaces can be up and running within a short time.
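The difference between the two delivery modes can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the Cartago interface: the `Collector` class, its `push` and `pull` methods, the `fetch_from_crm` source and the record layout are all hypothetical. In push mode the source system hands over a record as soon as it exists; in pull mode the collector asks the source for everything that has changed since its last request.

```python
from dataclasses import dataclass, field
from datetime import datetime
from typing import Callable, Iterable

# Hypothetical record layout: a dict of field name -> value.
Record = dict


@dataclass
class Collector:
    """Hypothetical staging area that accepts data by push or by pull."""
    staged: list = field(default_factory=list)
    last_pull: datetime = datetime.min

    def push(self, record: Record) -> None:
        # Push: the source system delivers a record as soon as it exists.
        self.staged.append(record)

    def pull(self, fetch: Callable[[datetime], Iterable[Record]], now: datetime) -> int:
        # Pull: the collector requests everything changed since the last pull.
        new_records = list(fetch(self.last_pull))
        self.staged.extend(new_records)
        self.last_pull = now
        return len(new_records)


# Hypothetical source system whose rows carry a modification timestamp.
CRM_ROWS = [
    {"id": 1, "name": "Ada", "modified": datetime(2024, 1, 1, 9)},
    {"id": 2, "name": "Ben", "modified": datetime(2024, 1, 2, 9)},
]


def fetch_from_crm(since: datetime) -> list:
    return [row for row in CRM_ROWS if row["modified"] > since]


collector = Collector()
collector.push({"id": 3, "name": "Cleo"})  # delivered by the source (push)
count = collector.pull(fetch_from_crm, now=datetime(2024, 1, 3))  # scheduled pull
print(count, len(collector.staged))  # 2 3
```

Because `last_pull` advances after each request, a second pull only returns rows modified since then; reloading data would, in this sketch, simply mean pulling again from an earlier timestamp.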

Data validation (analysis/quality assurance)

All data inputs are checked for both syntax and content, and each validation run is logged. If a check fails, subsequent workflows are either paused or flagged to be “reloaded” later, depending on the settings.
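The following sketch shows the two kinds of check and the two failure policies side by side. It assumes dict-based records and a hypothetical `on_error` setting with the values `"pause"` and `"reload"`; the field names and rules are illustrative only and do not reflect Cartago's actual configuration.

```python
import re
from dataclasses import dataclass, field

# Hypothetical rules: one syntax check (format) and one content check (plausibility).
POSTCODE = re.compile(r"^\d{5}$")


@dataclass
class ValidationResult:
    ok: list = field(default_factory=list)
    flagged_for_reload: list = field(default_factory=list)
    log: list = field(default_factory=list)
    paused: bool = False


def check(record: dict) -> list:
    """Return the error messages for one record (empty list if valid)."""
    errors = []
    # Syntax: does the value have the expected shape?
    if not POSTCODE.match(str(record.get("postcode", ""))):
        errors.append("postcode: invalid syntax")
    # Content: is the value plausible?
    amount = record.get("amount")
    if not isinstance(amount, (int, float)) or amount < 0:
        errors.append("amount: must be a non-negative number")
    return errors


def validate(records: list, on_error: str = "reload") -> ValidationResult:
    result = ValidationResult()
    for record in records:
        errors = check(record)
        # Every check is recorded, whether it passes or fails.
        result.log.append((record.get("id"), errors or ["ok"]))
        if not errors:
            result.ok.append(record)
        elif on_error == "pause":
            result.paused = True  # stop here: downstream workflows must not start
            break
        else:
            result.flagged_for_reload.append(record)  # retry later
    return result


batch = [
    {"id": 1, "postcode": "10115", "amount": 99.5},
    {"id": 2, "postcode": "ABC", "amount": -1},
    {"id": 3, "postcode": "80331", "amount": 12},
]
res = validate(batch, on_error="reload")
print(len(res.ok), [r["id"] for r in res.flagged_for_reload])  # 2 [2]
```

With `on_error="pause"` the same batch stops at record 2, leaving one valid record and two log entries.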

Data processing

The next step is to process the data. Processing takes place in a single stage or, where appropriate, in several stages, and handles the data either record by record or in data packets. As soon as the data has been converted into the designated structure, it is ready for further processing. Data can also be filtered, transformed, cleansed or consolidated during this step.
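A multi-stage process working on data packets can be pictured as a chain of functions applied to each batch. The sketch below is a hypothetical example, not Cartago's implementation: it cleanses, filters and transforms customer records in packets of two, then consolidates duplicates. A single-stage process would simply be a chain with one function.

```python
from collections import defaultdict
from itertools import islice
from typing import Iterable, Iterator

# Hypothetical stages and target structure, for illustration only.


def packets(records: Iterable, size: int) -> Iterator[list]:
    """Split a record stream into data packets of at most `size` records."""
    it = iter(records)
    while batch := list(islice(it, size)):
        yield batch


def cleanse(batch: list) -> list:
    # Trim whitespace and normalise e-mail addresses to lower case.
    return [{**r, "email": r["email"].strip().lower()} for r in batch]


def drop_inactive(batch: list) -> list:
    # Filter: keep only active customers.
    return [r for r in batch if r["active"]]


def to_target_structure(batch: list) -> list:
    # Transform into the (hypothetical) designated structure.
    return [{"customer": r["email"], "revenue": r["revenue"]} for r in batch]


def consolidate(rows: Iterable[dict]) -> dict:
    # Consolidate: merge duplicates by summing revenue per customer.
    totals = defaultdict(float)
    for row in rows:
        totals[row["customer"]] += row["revenue"]
    return dict(totals)


def run(records: Iterable[dict], stages: list, size: int = 2) -> dict:
    processed = []
    for batch in packets(records, size):
        for stage in stages:  # multi-stage: each stage feeds the next
            batch = stage(batch)
        processed.extend(batch)
    return consolidate(processed)


raw = [
    {"email": " Ada@Example.com", "active": True, "revenue": 10.0},
    {"email": "ben@example.com", "active": False, "revenue": 5.0},
    {"email": "ada@example.com ", "active": True, "revenue": 2.5},
]
print(run(raw, [cleanse, drop_inactive, to_target_structure]))
# {'ada@example.com': 12.5}
```

Cleansing before consolidation matters here: without normalising the e-mail addresses, the two entries for the same customer would not be merged.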

Optional: Data visualisation (reports & reporting)

After data processing, or even after data output, you can choose to have the data displayed visually. Visual elements are well suited to representing information such as utilisation profiles or frequencies, and they also offer a convenient way of keeping a check on your data. The module can, for example, generate a load profile for the hardware you are using in the cloud, which is relevant for licensing. It can also give online retailers an accessible view of when most of their orders are placed, so they know when to scale up capacity to cope with increased traffic. Having the right technologies at your disposal at exactly the right time improves efficiency and saves costs: after all, you only want to pay for the services you actually use.
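The online-retail example comes down to a frequency count. As a minimal sketch, assuming order timestamps are available as `datetime` values (the sample data below is made up), the following builds an hourly order profile, prints it as a simple text bar chart in place of a graphical report, and reports the peak hour.

```python
from collections import Counter
from datetime import datetime

# Made-up sample of order timestamps.
orders = [
    datetime(2024, 3, 1, 9, 15),
    datetime(2024, 3, 1, 12, 5),
    datetime(2024, 3, 1, 12, 40),
    datetime(2024, 3, 1, 20, 10),
    datetime(2024, 3, 1, 20, 25),
    datetime(2024, 3, 1, 20, 55),
    datetime(2024, 3, 2, 20, 30),
]


def hourly_profile(timestamps) -> Counter:
    """Count orders per hour of day (0-23)."""
    return Counter(ts.hour for ts in timestamps)


profile = hourly_profile(orders)

# A text bar chart stands in for the visual report.
for hour in range(24):
    if profile[hour]:
        print(f"{hour:02d}:00 {'#' * profile[hour]}")

peak_hour, peak_count = profile.most_common(1)[0]
print(f"Peak: {peak_hour:02d}:00 with {peak_count} orders")
```

The same counting approach applies to a hardware load profile: replace order timestamps with resource measurements and aggregate per time slot.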

Data outputs

The outputs of the system serve as the inputs for document generation. An automated process issues the data in a system-independent output format. Invoicing data, for example, is issued in the ZUGFeRD XML format, the current standard for electronic invoices in German-speaking countries.
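To make the idea of a system-independent output format concrete, the sketch below turns an internal invoice record into the header of a UN/CEFACT Cross Industry Invoice (CII) document, the XML syntax on which ZUGFeRD 2.x is built, using only the Python standard library. It is deliberately incomplete: the trade transaction part (parties, line items, totals) is left empty, so the result is not a valid ZUGFeRD invoice, and the profile identifier should be checked against the official specification. The `invoice` dict and `invoice_header_xml` function are hypothetical.

```python
import xml.etree.ElementTree as ET
from datetime import date

# Namespaces of the UN/CEFACT CII syntax used by ZUGFeRD 2.x.
NS = {
    "rsm": "urn:un:unece:uncefact:data:standard:CrossIndustryInvoice:100",
    "ram": "urn:un:unece:uncefact:data:standard:ReusableAggregateBusinessInformationEntity:100",
    "udt": "urn:un:unece:uncefact:data:standard:UnqualifiedDataType:100",
}
for prefix, uri in NS.items():
    ET.register_namespace(prefix, uri)


def q(prefix: str, tag: str) -> str:
    """Qualified tag name in ElementTree's {uri}local notation."""
    return f"{{{NS[prefix]}}}{tag}"


def invoice_header_xml(invoice: dict) -> bytes:
    """Hypothetical converter: internal record -> CII header skeleton."""
    root = ET.Element(q("rsm", "CrossIndustryInvoice"))

    context = ET.SubElement(root, q("rsm", "ExchangedDocumentContext"))
    guideline = ET.SubElement(context, q("ram", "GuidelineSpecifiedDocumentContextParameter"))
    # Profile identifier (MINIMUM profile): verify against the specification.
    ET.SubElement(guideline, q("ram", "ID")).text = "urn:factur-x.eu:1p0:minimum"

    doc = ET.SubElement(root, q("rsm", "ExchangedDocument"))
    ET.SubElement(doc, q("ram", "ID")).text = invoice["number"]
    ET.SubElement(doc, q("ram", "TypeCode")).text = "380"  # 380 = commercial invoice
    issued = ET.SubElement(doc, q("ram", "IssueDateTime"))
    # format="102" means the date is written as YYYYMMDD.
    ET.SubElement(issued, q("udt", "DateTimeString"), format="102").text = (
        invoice["issued"].strftime("%Y%m%d")
    )

    # Parties, line items and totals would go here; omitted in this sketch.
    ET.SubElement(root, q("rsm", "SupplyChainTradeTransaction"))
    return ET.tostring(root, encoding="utf-8", xml_declaration=True)


xml_bytes = invoice_header_xml({"number": "RE-2024-0001", "issued": date(2024, 3, 5)})
print(xml_bytes.decode("utf-8"))
```

The point of the design is that the receiving system only needs to understand the standardised XML, not the internal record layout it was generated from.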

Data provision (repository)

The repository is the data warehouse in which the data is physically held. This central repository may hold anything from a company’s General Terms and Conditions of Business through product images and other imagery in various resolutions to videos and PDFs. Authorised persons or third-party systems can access the repository and use the data at any time. Cartago’s repository is a data storage system based on a service-oriented architecture (SOA) with a web-based interface (thin client).
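The access model described here, a central store that authorised persons and third-party systems can query, can be sketched as a small service interface. Everything below is hypothetical (class, method and token names) and runs in memory; in a service-oriented deployment the same operations would sit behind a web service called by the thin client. The sketch keeps several variants of one asset, such as an image in different resolutions, and checks an access token before every read or write.

```python
from dataclasses import dataclass, field


class AccessDenied(Exception):
    pass


@dataclass
class Repository:
    """Hypothetical in-memory central repository with token-based access."""
    # token -> set of permissions, e.g. {"read", "write"}
    grants: dict
    # asset name -> variant (e.g. "thumbnail", "print") -> content bytes
    assets: dict = field(default_factory=dict)

    def _require(self, token: str, permission: str) -> None:
        if permission not in self.grants.get(token, set()):
            raise AccessDenied(f"token lacks '{permission}' permission")

    def put(self, token: str, name: str, variant: str, content: bytes) -> None:
        self._require(token, "write")
        self.assets.setdefault(name, {})[variant] = content

    def get(self, token: str, name: str, variant: str) -> bytes:
        self._require(token, "read")
        return self.assets[name][variant]

    def variants(self, token: str, name: str) -> list:
        self._require(token, "read")
        return sorted(self.assets.get(name, {}))


repo = Repository(grants={"editor-token": {"read", "write"}, "shop-system": {"read"}})
repo.put("editor-token", "product-42.jpg", "thumbnail", b"...small...")
repo.put("editor-token", "product-42.jpg", "print", b"...large...")
repo.put("editor-token", "gtc.pdf", "original", b"%PDF-...")

# A third-party system (here: a web shop) may read but not write.
print(repo.variants("shop-system", "product-42.jpg"))  # ['print', 'thumbnail']
try:
    repo.put("shop-system", "gtc.pdf", "original", b"tampered")
except AccessDenied as err:
    print("denied:", err)
```

Separating read and write permissions lets people and external systems share one central repository without every consumer being able to change its contents.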

Key benefits

- Highly efficient customer communication processes

- Fully automated document creation processes

- Synchronisation of different data sets

- Data standardisation and structuring

- Data security in accordance with GDPR

- Standard and customised solutions

- Standardised interfaces for SAP and Microsoft Excel

- Integration into any existing software architecture
